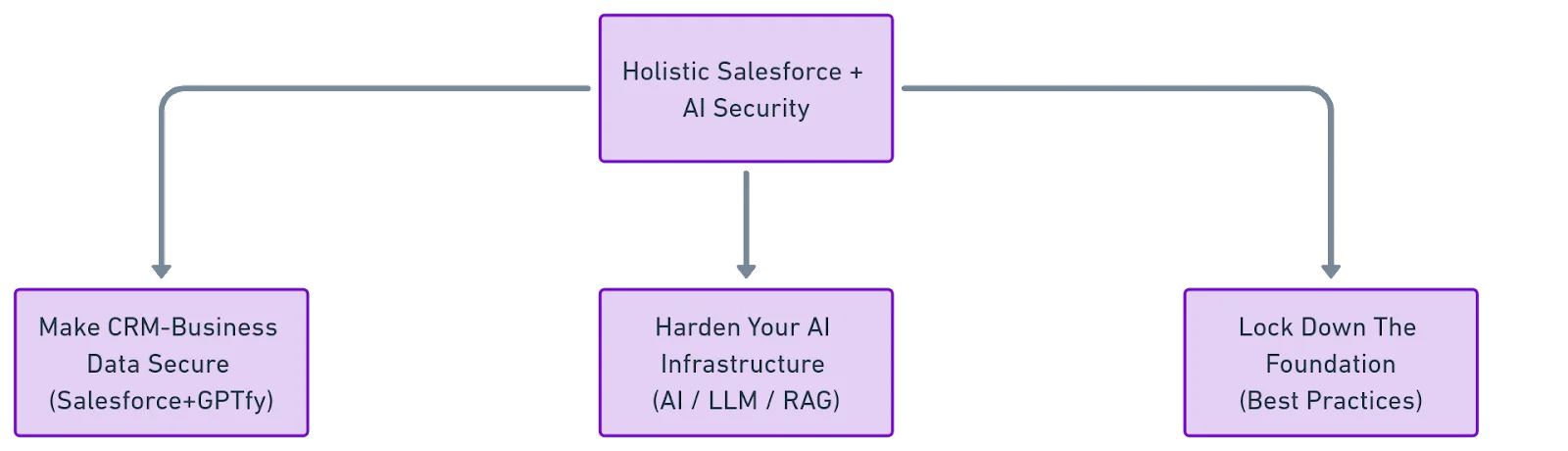

AI Holistic Security

What?

This plain English article provides a set of best practices for securely implementing GPTfy with Salesforce CRM. It’s a comprehensive and integrated approach to securing AI systems and their associated data, models, and infrastructure.

Who?

Salesforce Admins, Business Analysts, Architects, Product Owners, and anyone who wants to tap their Salesforce + artificial intelligence (AI) to its full potential.

Why?

Prevent data breaches. Ensure privacy & compliance. Maintain trust.

Help Salesforce users harness the power of AI to improve their CRM operations without compromising on security.

What can you do with it?

Secure CRM Data

Implement data protection strategies, including data extraction controls, information masking, and automatic deletion protocols to safeguard your data.

Strengthen AI Infrastructure

Enhance AI integration security by introducing stringent access controls, ensuring no data retention post-processing, and securing AI interactions.

What can you expect?

To develop a security-first approach with AI, using secure prompts, clear interaction guidelines, minimal data exposure, and data masking techniques in non-production environments to protect sensitive information from third parties.

By mastering these three pillars, you can confidently unleash the power of AI in Salesforce, knowing your data remains safe and secure.

1. Make CRM-Business Data Secure: Data is King, Protect it Well

Why it matters

Imagine a data breach exposing customer PII or confidential business information. Nightmare, right? Secure data handling in Salesforce + GPTfy minimizes this risk for your AI project.

What you can do

Secure Data Extraction

Ensure all data extraction happens within the Salesforce platform, respecting user visibility and business rules.

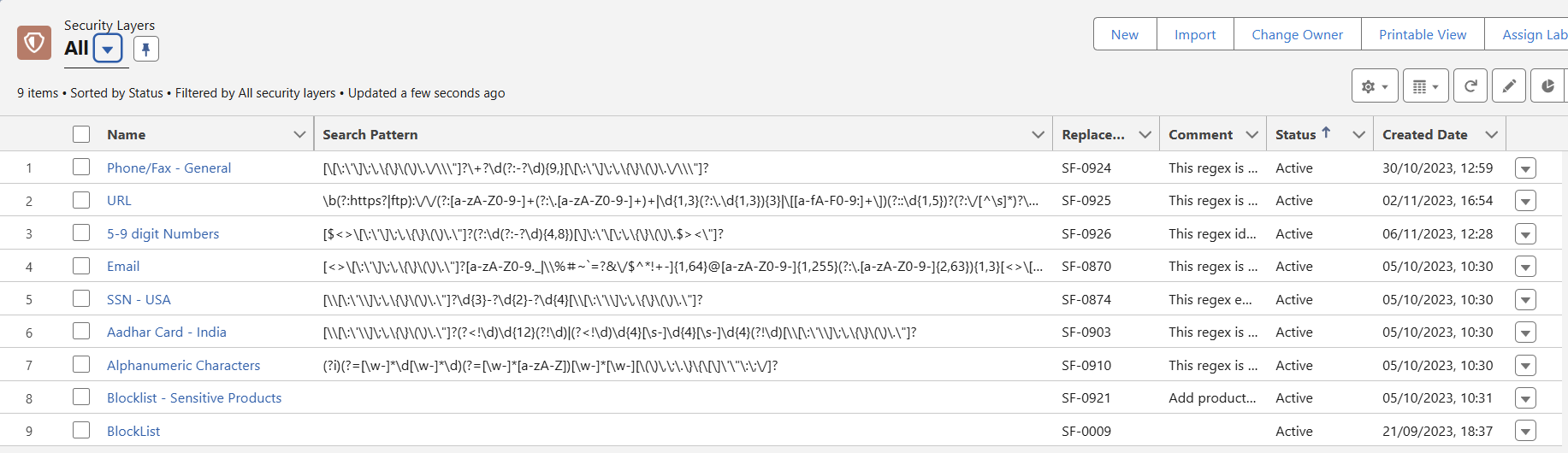

Apply Multi-layered Masking

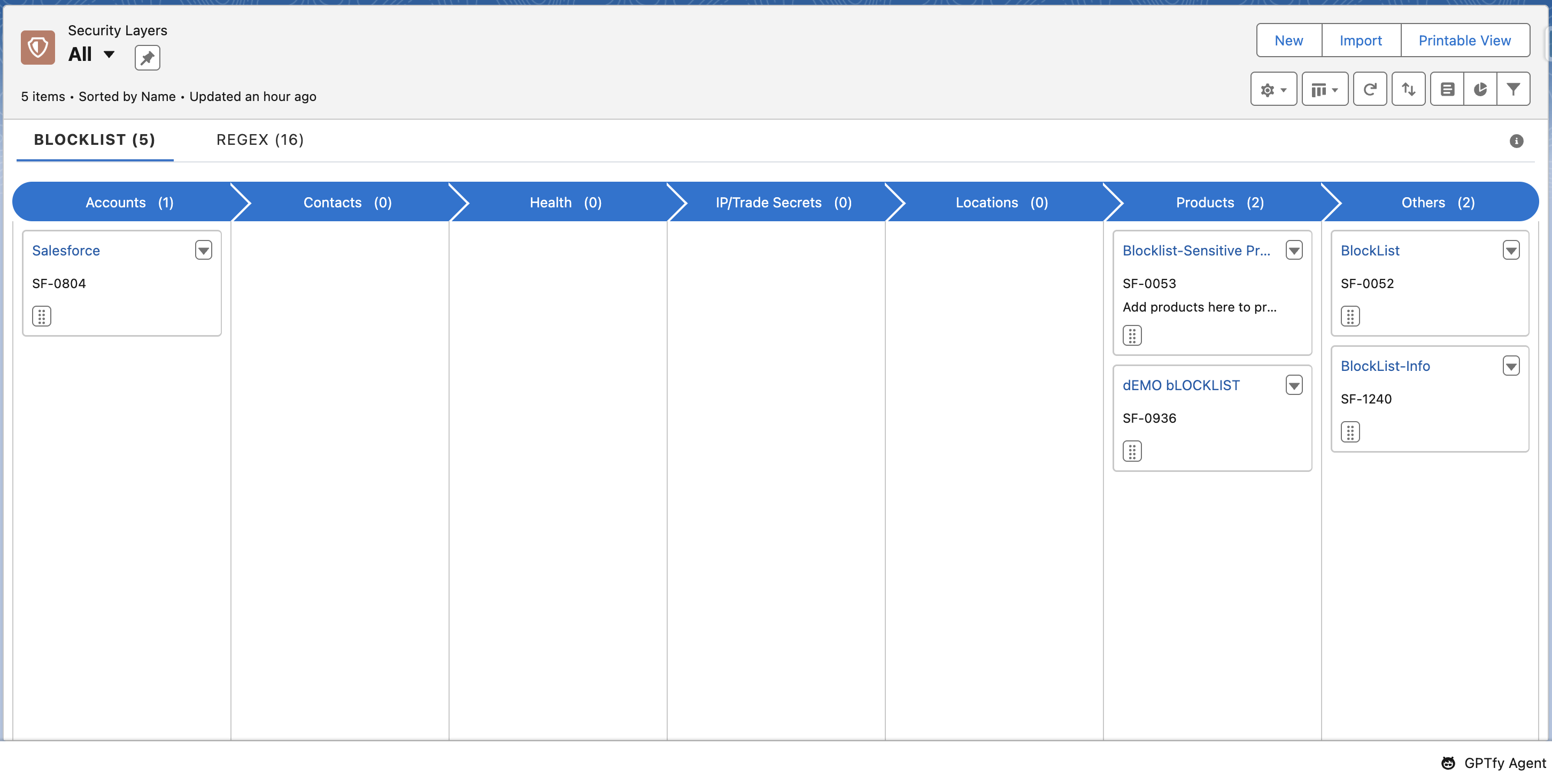

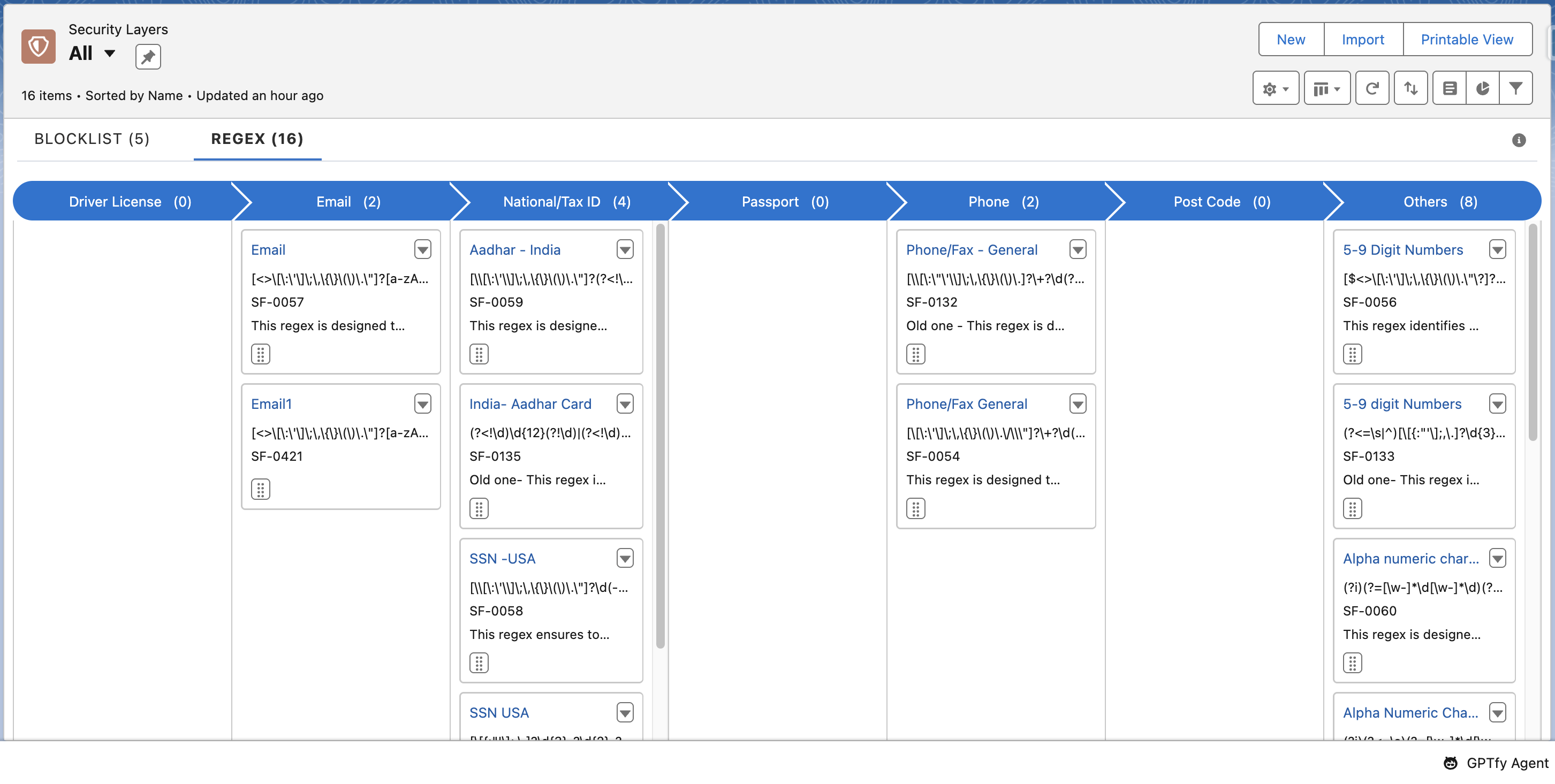

Leverage GPTfy’s multi-layered masking to hide sensitive data like PII and PHI. Combine field-level masking with pattern recognition (via Regex) and term blocking for comprehensive protection.

Blocklist: Allows you to specify a list of values to be anonymized (e.g., product names).

Regex: Uses Regular Expression (Regex) patterns for common data types (Email, Phone, SSN, locale-specific variants, etc.)

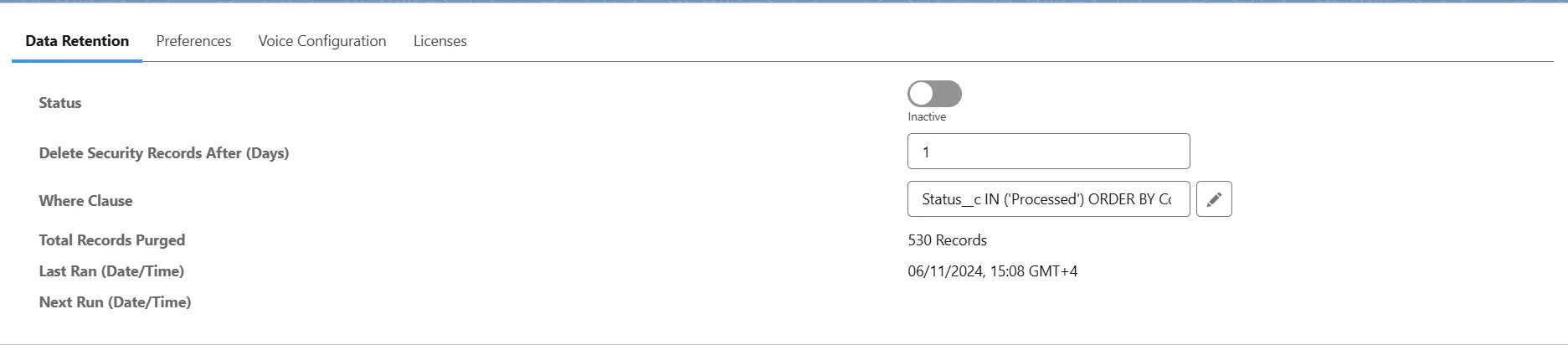

Automated AI Data Retention

Configure GPTfy to delete AI response security audits automatically, based on your data retention policies, preventing unnecessary and potentially risky data accumulation.

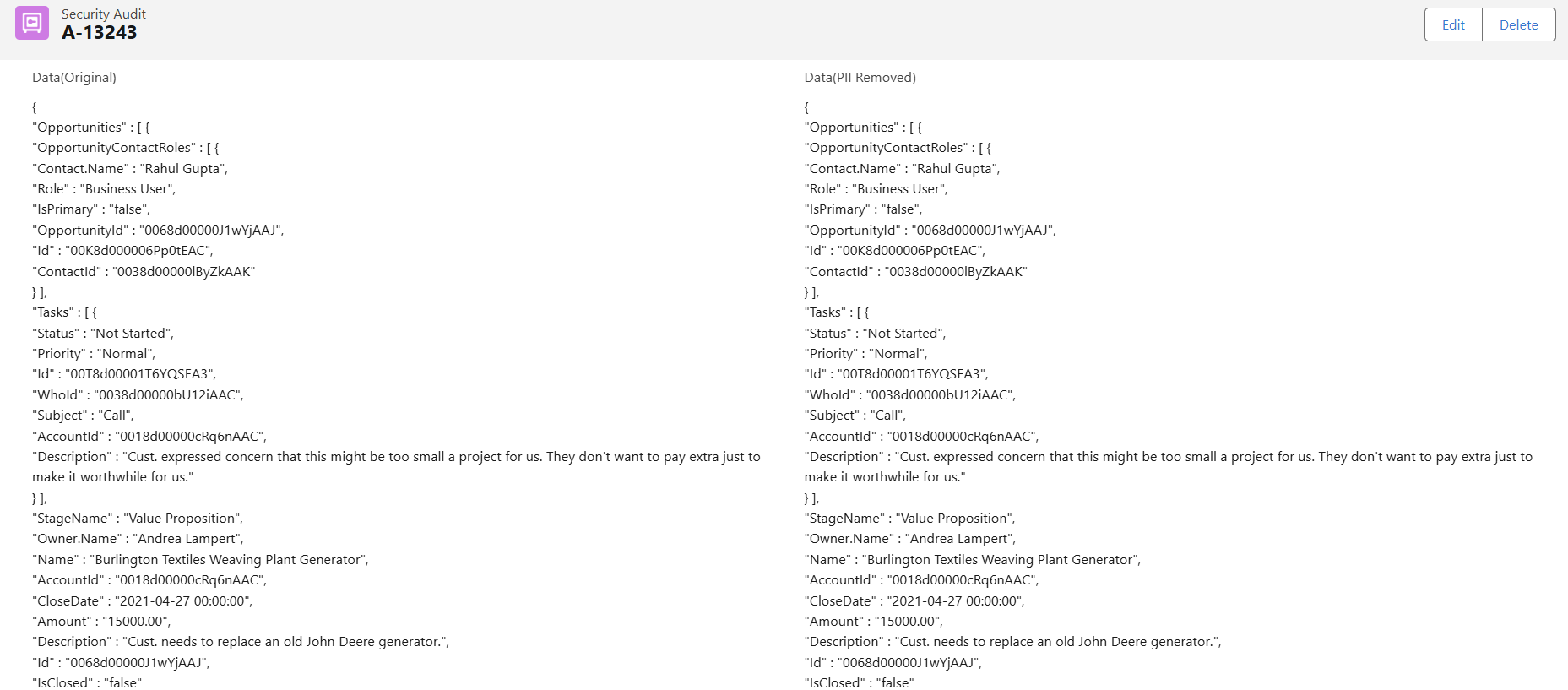

AI Response Auditing

Regularly review AI response security audits to validate data masking effectiveness and identify suspicious responses. Consider programmatic logic for enhanced vigilance.

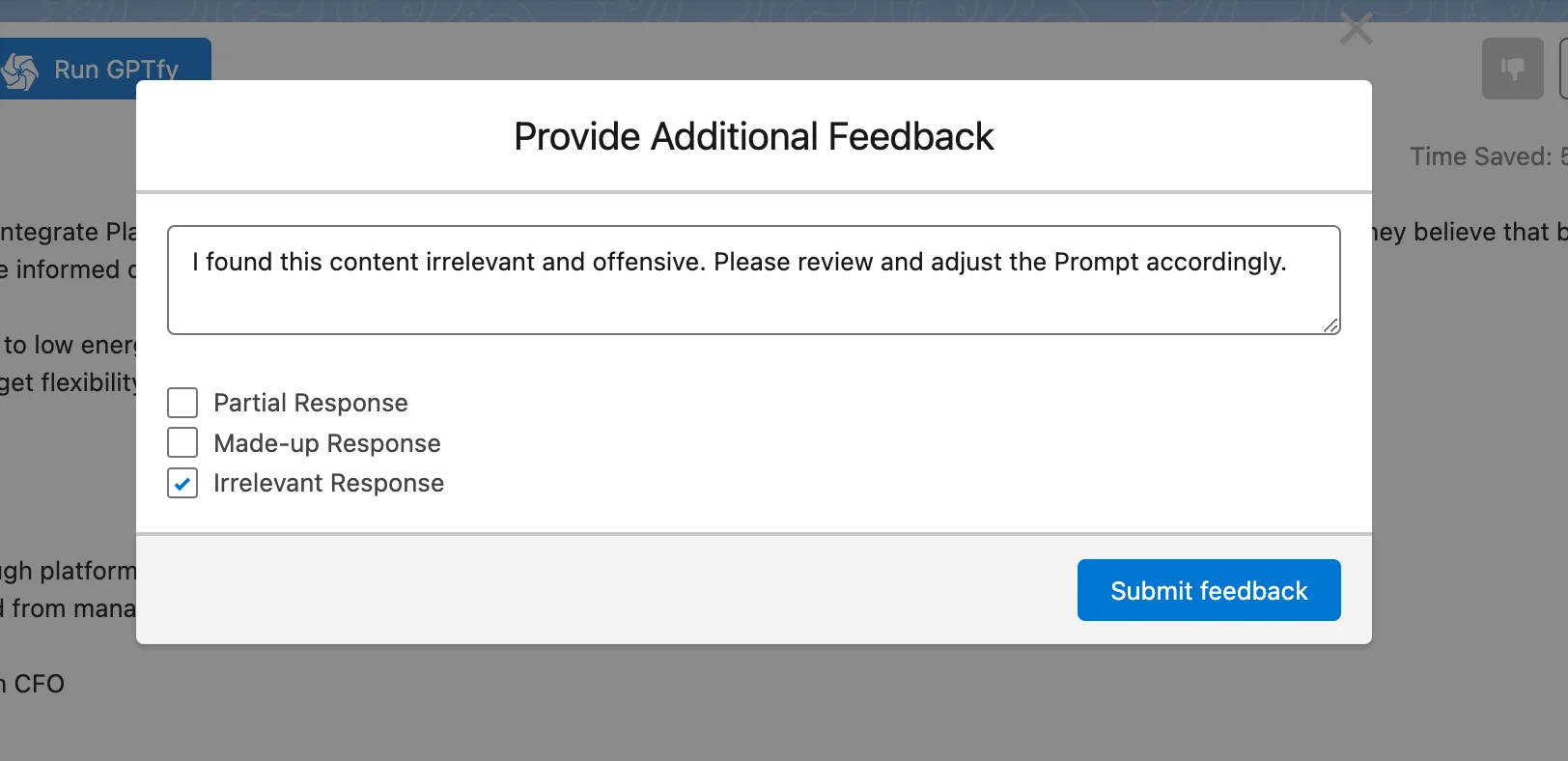

Collect User Feedback for RLHF

RLHF (Reinforcement Learning from Human Feedback) engages users in providing feedback on AI performance. Their input strengthens data security and drives continuous AI model improvement.

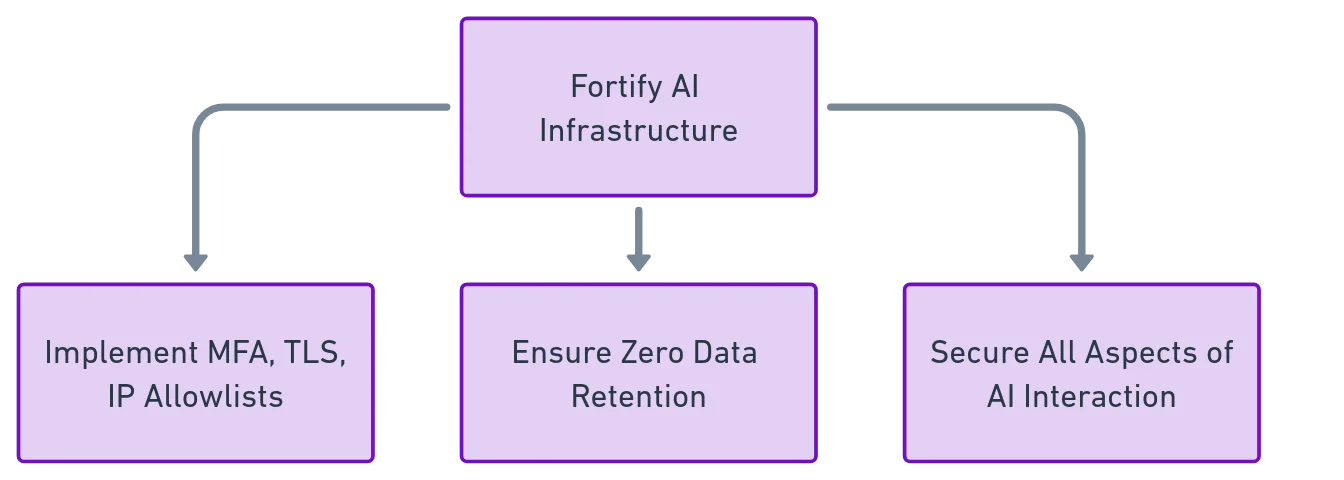

2. Harden Your AI Infrastructure: Build an Impregnable Wall

Why it matters

Think of your AI infrastructure as the castle protecting your data. Make it impenetrable!

What you can do

Integrate Security

Implement this, along with Transport Layer Security (TLS) and IP allowlists, to control access to AI resources.

Zero Data Retention

Ensure no customer data lingers on the AI infrastructure post-processing. This guarantees privacy and compliance.

Lock it Down Tight

Secure all aspects, from writing secure prompts and applying AI interaction guidelines to limiting CRM data exposure and masking data within AI sandboxes. Every detail matters!

Below are three sets of best practices by industry leaders

Amazon Web Service (AWS)

-

Security Best Practices for Machine Learning

Emphasizes secure coding practices for model development, data encryption at rest and in transit, and continuous anomaly monitoring.

Read more: AWS Security Pillar Best Practices -

AWS WAF for AI Model Protection

Covers protection from attacks like adversarial inputs and SQL injection.

Read more: AWS WAF for Model Protection

Azure

-

Security Best Practices for Machine Learning

Outlines secure coding, data handling, and model monitoring.

Read more: Azure ML Security Best Practices -

Responsible AI in Azure Machine Learning

Emphasizes fairness, accountability, and transparency in AI development.

Read more: Responsible AI in Azure

Google Cloud

-

Best Practices for Machine Learning

Includes data governance, adversarial training, and explainability.

Read more: Google Cloud ML Best Practices -

Cloud Data Loss Prevention (DLP)

Helps prevent sensitive data exposure in AI pipelines and sandboxes.

Read more: Google Cloud DLP

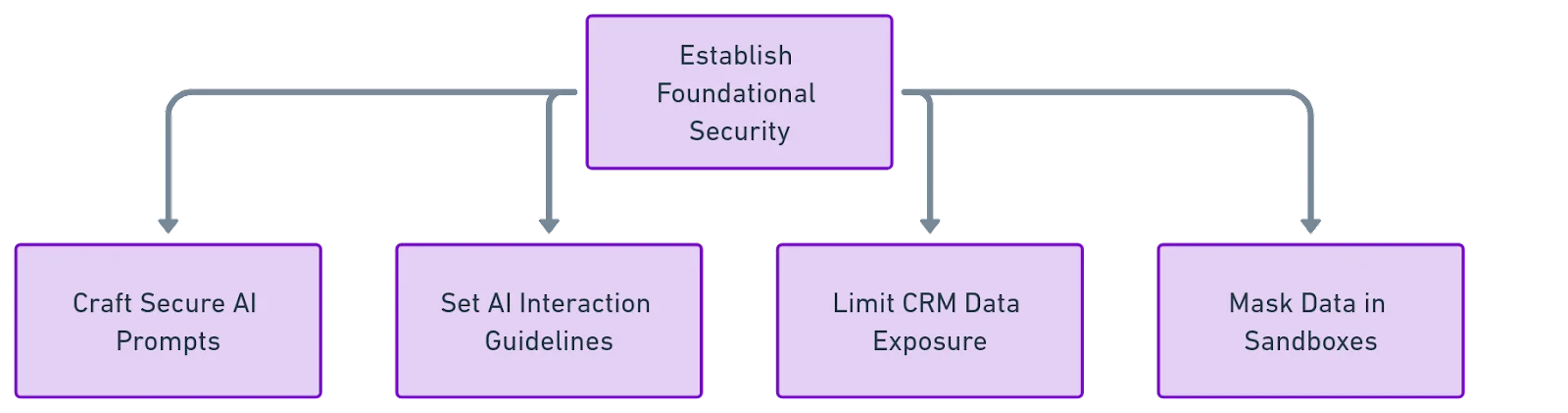

3. Lock Down the Foundation: The Bedrock of Trust

Why it matters

Security measures go beyond data and infrastructure. Think of it as the overall security culture supporting your AI journey.

What you can do

Craft Secure Prompts

Design AI prompts without revealing sensitive information or generating insecure content.

Set Ground Rules

Establish clear guidelines for AI interactions, ensuring adherence to security and privacy standards.

Limit CRM Data Exposure

Limit the amount of CRM data fed into AI models to what’s strictly necessary for the task at hand. Less data, less risk!

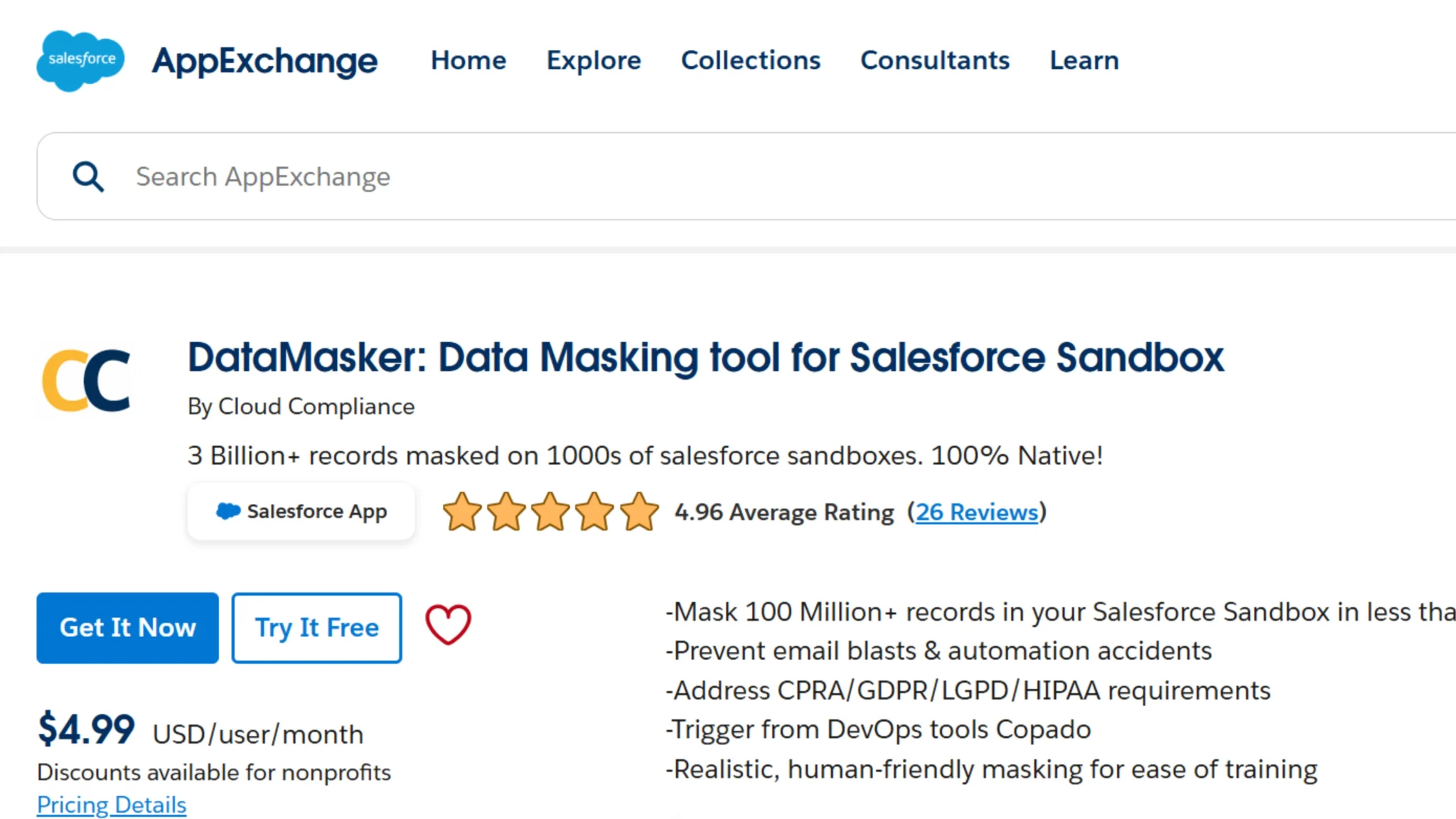

Mask Data in Sandboxes

Ensure development and testing environments (sandboxes) are free from sensitive data. Use masking techniques to protect information even in non-production settings.

Conclusion

When unlocking the potential of AI in Salesforce with security as your top priority, GPTfy is a game-changer.

By following these best practices, you can confidently leverage the power of AI while ensuring your data remains safe and secure.

Remember, GPTfy offers robust security features that, when combined with these practices, create an impenetrable shield for your Salesforce and AI integration.

Embrace the future of AI, and do it securely!